Blueprint for an AI Bill of Rights

Artificial Intelligence and Human Rights

Artificial intelligence has many benefits, from technology that helps farmers grow food more efficiently to algorithms that help healthcare providers identify disease in patients. Big data is transforming industries worldwide, and AI-powered tools increasingly drive decision-making within them. Together, these technologies have the potential to reshape society and improve people's lives. However, this revolution also has a darker side.

Technology, data, and automated systems can also threaten democracy and people's rights. These tools are too often used to limit people's opportunities and block their access to critical resources or services. Systems meant to help with patient care have proven to be unsafe, ineffective, or biased. Algorithms used in hiring have been found to reproduce existing inequities or to embed new bias and discrimination. Unchecked data collection on social media has been used to undermine users' privacy, often without their knowledge or consent. These outcomes are deeply harmful, but with the right safeguards in place, they can be avoided.

How to Make AI Safer

President Biden has stressed the importance of privacy, describing it as "the basis for so many more rights that we have come to take for granted that are ingrained in the fabric of this country." (Remarks made by President Biden on the Supreme Court Decision to Overturn Roe v. Wade on June 24, 2022). Following his lead, the White House Office of Science and Technology Policy identified five principles that should guide the design, use, and deployment of automated systems to protect the American public. These principles are as follows:

Safe and Effective Systems

Diverse communities, stakeholders, and domain experts should be consulted during the development of automated systems to identify concerns, risks, and potential impacts. Pre-deployment testing, risk identification and mitigation, and ongoing monitoring should be carried out to ensure that systems are safe and effective for their intended use, and use outside that intended purpose should be prevented. Adherence to domain-specific standards must be ensured. If an automated system does not meet these standards, it should not be deployed, or it should be removed if it is already in use. Systems should be evaluated by an independent body, and the findings should be made public whenever possible.

Algorithmic Discrimination Protections

Algorithmic discrimination occurs when automated systems contribute to unjustified different treatment of, or impacts on, people based on race, color, ethnicity, sex (including pregnancy and related medical conditions), sexual orientation, religion, age, national origin, disability, or any other classification protected by law. Designers and developers should take proactive measures to ensure that their systems do not discriminate against any part of the community. Equity assessments should be built into system design, and systems should be accessible to people with disabilities. An algorithmic impact assessment should be carried out by an independent body, and the results should be made public whenever possible.

Data Privacy

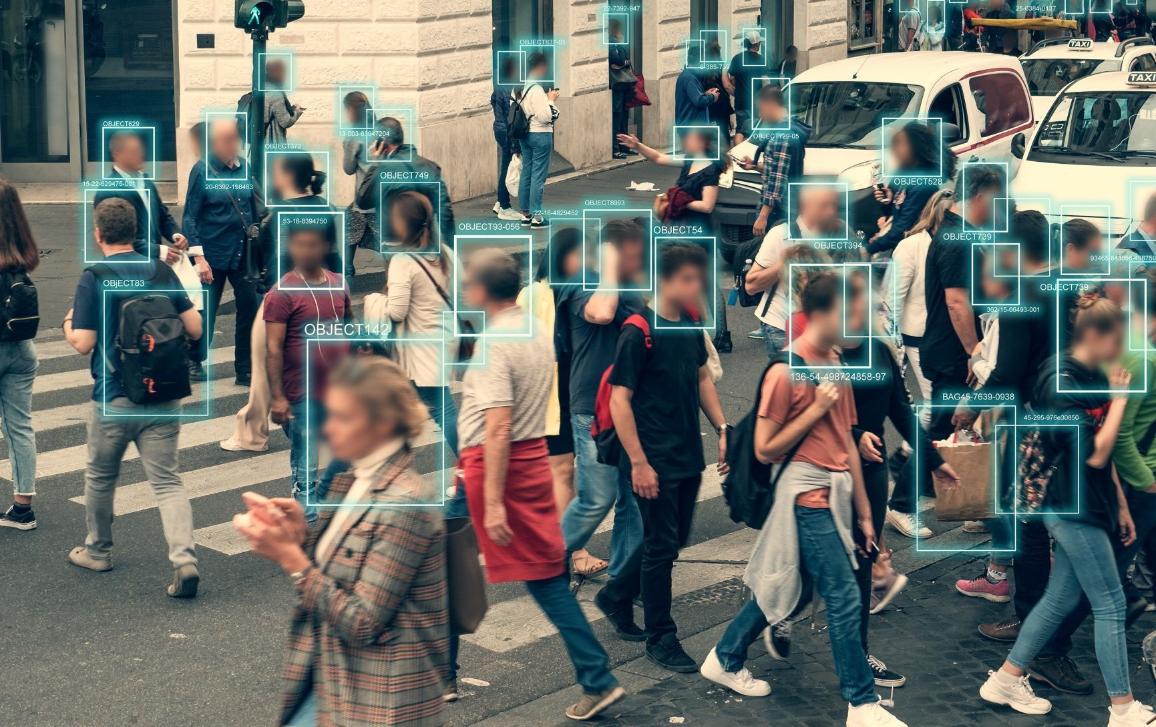

Data collection should conform to reasonable expectations, and only the data strictly necessary for a particular context should be collected. Designers, developers, and deployers of automated systems should seek users' permission, and respect their decisions, regarding the collection, use, access, transfer, and deletion of their data. Where consent cannot be obtained, alternative safeguards should be used to protect privacy. Interface design and default settings should not obscure users' choices or steer them toward privacy-invasive options. Consent should only be requested where it can be appropriately and meaningfully given. Consent requests should be brief, understandable, and written in plain language, and they should specify what kind of data is being collected and the context in which it will be used. Sensitive domains, such as healthcare, education, criminal justice, and finance, should be subject to additional oversight. Surveillance technologies should be assessed for potential harm before deployment. Continuous surveillance should be avoided in education, work, and other settings where it is likely to limit people's rights, opportunities, or access. Whenever possible, users should have access to reporting that confirms their data decisions have been respected.

Notice and Explanation

Designers, developers, and deployers of automated systems should provide clear, jargon-free documentation. This documentation should describe the overall functioning of the system and the role that automation plays in it, and it should state who is responsible for the system. People affected by the system should be told that an automated system is being used and should receive clear, timely explanations of how it produced outcomes that affect them. All information should be kept up to date, and users should be notified of any significant changes to the system's use or key functionality.

Human Alternatives, Consideration & Fallback

Where appropriate, users should be able to opt out of an automated system and interact with a human instead. Whether opting out is appropriate should be determined by reasonable expectations in the given context. In some instances, the law requires that a human alternative be available. If an automated system fails or produces an error, or if a user wants to contest its decision, timely access to human review should be ensured. Automated systems used in sensitive domains should be overseen by appropriately trained staff, with human consideration for adverse or high-risk decisions. Information about these human governance processes should be made public whenever possible.

Conclusion

Together, these five principles make up the AI Bill of Rights and provide a blueprint for protecting the public from harm. The measures taken to implement this framework should be proportionate to the extent and nature of the potential harm to people's rights, opportunities, and access.